Time Series Analysis in Python - Introduction to ARIMA

What is Time Series?

If we want to predict something in the future, e.g. stock prices or sales, we use time series analysis over past data. We can predict daily stock prices, weekly interest rates, sales figures and so on, wherever the outcome varies over time. In such scenarios, we use time series forecasting.

A time series is a set of observations taken at specified times, usually at equal intervals. It is used to predict future values based on previously observed values.

With time series analysis we can not only predict future values but also understand past behavior, plan for the future and evaluate current accomplishments.

Components of Time Series


Trend:

- A trend exists when there is a long-term increase or decrease in the data. It does not have to be linear. It could go from an increasing trend to a decreasing trend.

- There is a trend in the antidiabetic drug sales data shown below.

Source: otext.org



Seasonality:

- A seasonal pattern occurs when a time series is affected by seasonal factors such as the time of the year or the day of the week.

- Seasonality is always of a fixed and known frequency.

- The monthly sales of antidiabetic drugs above show seasonality which is induced partly by the change in the cost of the drugs at the end of the calendar year.


Irregularity:

- It is also called residual.

- These variations are erratic and unsystematic; they typically last for short durations and do not repeat.

- Let’s take an example. If there is a sudden natural disaster, e.g. a flood in a town, a lot of people buy medicine and ointment, and after some time, when everything settles down, sales of those ointments go down again. Nobody knows how many sales will happen during such an event, and nobody can prevent the event from happening. This type of random variation is called irregularity.


Cyclic:

- A cycle occurs when the data exhibit rises and falls that are not of a fixed frequency.

- These fluctuations are usually due to economic conditions, and are often related to the “business cycle”. The duration of these fluctuations is usually at least 2 years.

When NOT to use Time Series?

Time series analysis is not applicable when:

- values are constant such as y = c.

- values are in the form of a function, such as y = sin(x), y = log(x).

What is Stationarity?

- If a time series has shown a particular behavior over time, there is a very high probability that it will behave the same way in the future.

- A stationary time series is one whose properties do not depend on the time at which the series is observed.

- Time series with trends (varying mean over time), or with seasonality (variations of a specific time frame), are not stationary — the trend and seasonality will affect the value of the time series at different times.

- On the other hand, a white noise series is stationary — it does not matter when you observe it, it should look much the same at any point in time.

- Some cases can be confusing — a time series with cyclic behavior (but with no trend or seasonality) is stationary. This is because the cycles are not of a fixed length, so before we observe the series we cannot be sure where the peaks and troughs of the cycles will be.

In general, a stationary time series will have no predictable patterns in the long-term. Time plots will show the series to be roughly horizontal (although some cyclic behavior is possible), with constant variance.

Figure 2: Which of these series are stationary? (a) Google stock price for 200 consecutive days; (b) Daily change in the Google stock price for 200 consecutive days; (c) Annual number of strikes in the US; (d) Monthly sales of new one-family houses sold in the US; (e) Annual price of a dozen eggs in the US (constant dollars); (f) Monthly total of pigs slaughtered in Victoria, Australia; (g) Annual total of lynx trapped in the McKenzie River district of north-west Canada; (h) Monthly Australian beer production; (i) Monthly Australian electricity production. Source: otext.org


Consider the nine series plotted in Figure 2. Which of these do you think are stationary?

Obvious seasonality rules out series (d), (h) and (i). Trends and changing levels rule out series (a), (c), (e), (f) and (i). Increasing variance also rules out (i). That leaves only (b) and (g) as stationary series.

At first glance, the strong cycles in series (g) might appear to make it non-stationary. But these cycles are aperiodic. In the long-term, the timing of these cycles is not predictable. Hence the series is stationary.

How to Remove Non-Stationarity?

A stationary time series has the following characteristics. To make a series stationary, we have to transform it until these characteristics hold.

- Constant mean over time

- Constant variance at different time intervals

- Autocovariance that does not depend on time, i.e. the covariance between the values at time t and at earlier times such as t-1, t-2 etc. depends only on the lag between them

Tests to Check Stationarity


Rolling Statistics

- Plot the moving average or moving variance and see if it varies with time.

- It’s more of a visual technique, not suitable for production.

- It can be used for proof-of-concept (POC) purposes.


ADF (Augmented Dickey-Fuller) Test

- The test starts with a null hypothesis that the time series is non-stationary, followed by some statistical results based on that hypothesis.

- The test results comprise a Test Statistic value and some Critical Values.

- We will go through the rules that check stationarity in a time series later on in our example.

- More about this algorithm can be found here.

What is the ARIMA model?

- ARIMA stands for Auto-Regressive Integrated Moving Average

- ARIMA is one of the most widely used models for time series data.

- It combines two models, AR and MA, with a differencing step.

- AR (Auto-Regressive) model: Uses the correlation between the current time step and previous time steps. Parameterized with p, the number of autoregressive lags.

- MA (Moving Average) model: Models the current value using the noise (forecast errors) of previous time steps, which accounts for irregularities. Parameterized with q, the number of moving average terms.

- I (Integrated): Before these two models are combined, the series is differenced d times to make it stationary. Parameterized with d, the degree of differencing.

ACF and PACF

To find p and q, we have to determine the PACF and ACF, which are the partial autocorrelation function and the autocorrelation function of a time series respectively. The ACF gives the autocorrelation between the values at time t and t-k, where k = 1, 2, …, t-1, and is used to choose q. The PACF measures the same correlation after removing the effect of the intermediate lags, and is used to choose p.

If you are interested to know some details of ACF and PACF, please refer to this link.

Example: Forecast Future

Build a model to forecast passenger traffic on airplanes. The data consists of a date (month) and the number of passengers traveling in that month.

| Month | #Passengers |
|---|---|
| 1949-01-01 | 112 |
| 1949-02-01 | 118 |
| 1949-03-01 | 132 |
| 1949-04-01 | 129 |
| 1949-05-01 | 121 |

Import necessary libraries

We will be using numpy for matrix manipulation, pandas for loading the dataset from csv, and matplotlib to visualize the data in different formats, e.g. graphs and charts. We will also be using some other libraries to perform statistical analysis later on.


Load the dataset air-passengers.csv

First, we need to convert the date strings to Python datetime format. We also want to use Month as the index of this data table.
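A minimal sketch of this loading step, assuming the CSV has the two columns shown in the table above. To keep the snippet self-contained, the first five rows are read from an in-memory string; with the real file you would pass the path `air-passengers.csv` to `read_csv` instead.

```python
import io

import pandas as pd

# Stand-in for the file on disk: the first five rows of the dataset.
csv_text = io.StringIO(
    "Month,#Passengers\n"
    "1949-01-01,112\n"
    "1949-02-01,118\n"
    "1949-03-01,132\n"
    "1949-04-01,129\n"
    "1949-05-01,121\n"
)

# parse_dates converts the Month strings to datetimes,
# index_col makes Month the index of the table.
df = pd.read_csv(csv_text, parse_dates=["Month"], index_col="Month")

print(df.head())
```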


Let’s print some data from the beginning of the table.


| Month | #Passengers |
|---|---|
| 1949-01-01 | 112 |
| 1949-02-01 | 118 |
| 1949-03-01 | 132 |
| 1949-04-01 | 129 |
| 1949-05-01 | 121 |

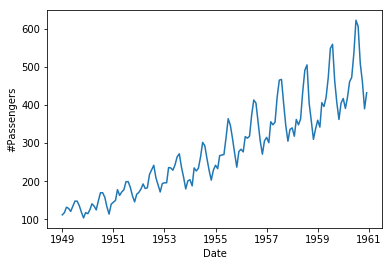

Let’s plot this data as a graph with matplotlib.


Check if the data is Stationary or not

We will be using both rolling statistics and the ADF test for this purpose.

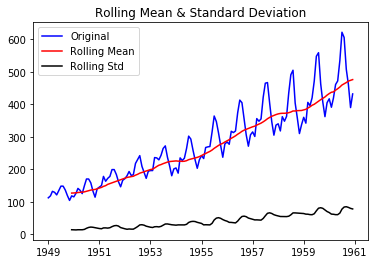

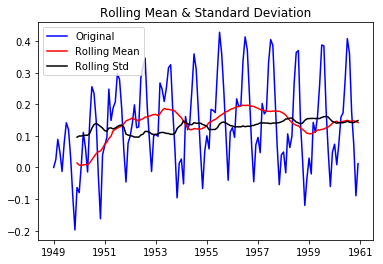

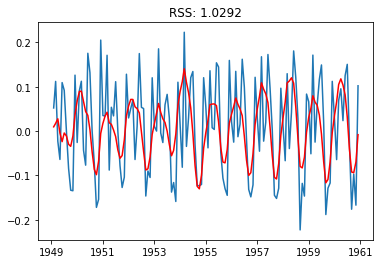

Determining Rolling Statistics

Since the dataset is monthly, we can set the rolling window to yearly (12 months), half-yearly (6 months) or even quarterly (3 months). We will be using 12 months, i.e. 12 rows, in this case to determine the means and standard deviations and check whether they are constant.
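A small sketch of the 12-month rolling statistics. The series here is a synthetic stand-in (an assumption: 144 monthly values with an upward trend and a yearly swing), not the real passenger data.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the passenger series (NOT the real data).
rng = np.random.default_rng(0)
months = pd.date_range("1949-01-01", periods=144, freq="MS")
t = np.arange(144)
passengers = pd.Series(
    np.exp(4.7 + 0.01 * t + 0.1 * np.sin(2 * np.pi * t / 12) + rng.normal(0, 0.03, 144)),
    index=months,
    name="#Passengers",
)

# Mean and standard deviation over a sliding window of 12 rows.
rolling_mean = passengers.rolling(window=12).mean()
rolling_std = passengers.rolling(window=12).std()

print(rolling_mean.head(13))
print(rolling_std.head(13))
```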


The first 11 rows have NaN values. Each mean and standard deviation is computed over a window of 12 rows, and the first 11 rows do not yet fill a window, so the statistics start at the 12th row.

Let’s plot these rolling statistics.


We can see that the means and standard deviations are not constant and we can say that this time series is not stationary.

Perform Dickey-Fuller Test

We will be using the statsmodels library for this purpose. The adfuller function gives us the following values:

- Test Statistic

- p-value

- Number of lags used

- Number of observations used

If we don’t want to dive into the details of the Dickey-Fuller algorithm and just want to know from the test results whether the time series is stationary, we can use the summary below:

- Based on the p-value, if

- p-value > 0.05: The data is non-stationary.

- p-value <= 0.05: The data is stationary.

- Based on the test statistic, if

- the test statistic is less than the critical values (ideally less than the 1% critical value): The data is stationary.

- the test statistic is greater than the critical values: The data is non-stationary.


In summary, we can say that the data is non-stationary, since the adfuller test shows a p-value greater than 0.05 and a test statistic greater than the critical values.

Formalize Stationarity Checking in a Function

We can now formalize the stationarity checking scripts in a function, test_stationarity. It performs both the rolling statistics and the ADF test to check stationarity.


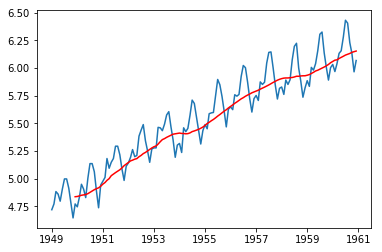

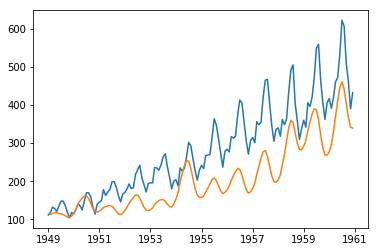

Make The Data Stationary

There are several ways to make the data stationary, and the right one depends on the data. In this case, we can take the log of the data and subtract its moving average to make it somewhat stationary.
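A sketch of this transformation, assuming a 12-month moving average and using a synthetic stand-in series rather than the real passenger data.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the passenger series (NOT the real data).
rng = np.random.default_rng(0)
months = pd.date_range("1949-01-01", periods=144, freq="MS")
t = np.arange(144)
passengers = pd.Series(
    np.exp(4.7 + 0.01 * t + 0.1 * np.sin(2 * np.pi * t / 12) + rng.normal(0, 0.03, 144)),
    index=months,
    name="#Passengers",
)

# Log scale flattens the growing variance; subtracting the
# 12-month moving average removes most of the trend.
log_passengers = np.log(passengers)
moving_avg = log_passengers.rolling(window=12).mean()
log_minus_ma = (log_passengers - moving_avg).dropna()  # first 11 rows are NaN

print(log_minus_ma.head(12))
```

Because the first 11 rows have no moving average, the transformed series starts at 1949-12-01.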


| Month | #Passengers |
|---|---|
| 1949-12-01 | -0.065494 |
| 1950-01-01 | -0.093449 |
| 1950-02-01 | -0.007566 |
| 1950-03-01 | 0.099416 |
| 1950-04-01 | 0.052142 |
| 1950-05-01 | -0.027529 |
| 1950-06-01 | 0.139881 |
| 1950-07-01 | 0.260184 |
| 1950-08-01 | 0.248635 |
| 1950-09-01 | 0.162937 |
| 1950-10-01 | -0.018578 |
| 1950-11-01 | -0.180379 |

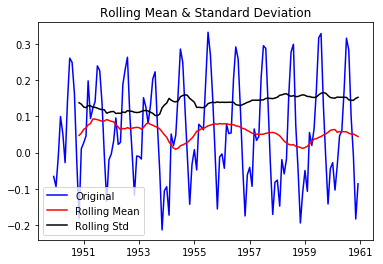

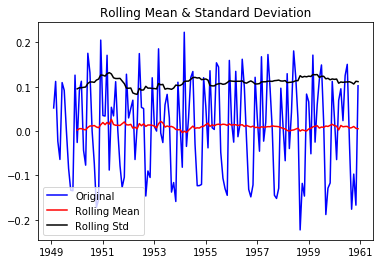

Now call this function with our newly transformed data.


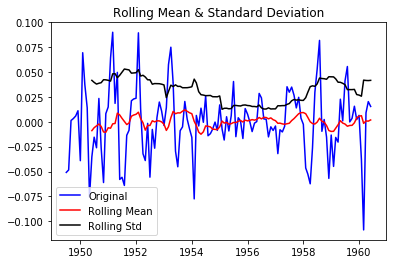

This is better than the original and looks somewhat stationary, and the adfuller test also suggests that this transformed series is stationary. Let’s try another transformation to make it more stationary. We can subtract the exponentially weighted average from the log-scaled values and check stationarity again.
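A sketch of the exponentially weighted variant using pandas `ewm`. The half-life of 12 months is an assumption chosen to match the yearly window used earlier, and the series is a synthetic stand-in.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the passenger series (NOT the real data).
rng = np.random.default_rng(0)
months = pd.date_range("1949-01-01", periods=144, freq="MS")
t = np.arange(144)
passengers = pd.Series(
    np.exp(4.7 + 0.01 * t + 0.1 * np.sin(2 * np.pi * t / 12) + rng.normal(0, 0.03, 144)),
    index=months,
    name="#Passengers",
)

log_passengers = np.log(passengers)

# Exponentially weighted average: recent months get more weight.
ewm_avg = log_passengers.ewm(halflife=12, min_periods=0, adjust=True).mean()
log_minus_ewm = log_passengers - ewm_avg

print(log_minus_ewm.head())
```

Unlike the plain moving average, this average is defined from the first row, so no rows are lost to NaN.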


So both of the above transformations can make the data stationary. Now let’s start working on finding p, d and q to feed in our ARIMA model.

First of all, let’s get first order differences.
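First-order differencing subtracts from each value the value one step before it. A minimal sketch on a synthetic stand-in series (not the real passenger data):

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the passenger series (NOT the real data).
rng = np.random.default_rng(0)
months = pd.date_range("1949-01-01", periods=144, freq="MS")
t = np.arange(144)
passengers = pd.Series(
    np.exp(4.7 + 0.01 * t + 0.1 * np.sin(2 * np.pi * t / 12) + rng.normal(0, 0.03, 144)),
    index=months,
    name="#Passengers",
)

log_passengers = np.log(passengers)

# shift(1) moves every value one row down, so the subtraction
# gives value(t) - value(t-1); the first row has no predecessor.
log_diff = (log_passengers - log_passengers.shift(1)).dropna()

print(log_diff.head())
```

`log_passengers.diff()` computes the same differences in one call.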


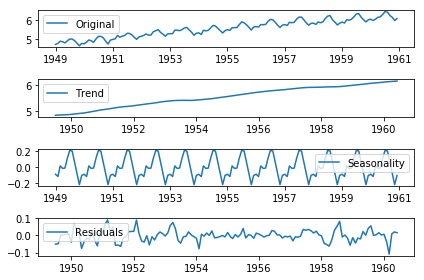

Decompose into Trend, Seasonality and Residual

Now, let’s decompose the values into trend, seasonal and residual components. We can do so using the statsmodels.tsa.seasonal module.


Now, we can test whether the residual is stationary using our test_stationarity function.


We can easily see that the residual is not stationary. Since the MA component deals with the residual, this data would have to be transformed to make it stationary, so that the noise is smoothed out before predicting what happens next. But for now, don’t worry about that: the ACF and PACF curves will help us find proper values of p and q, and feeding those into the ARIMA model will do the job for us.

Determine ACF and PACF

Now we know the value of d, which is 1 since we took first-order differences. We still have to find the values of p and q. We can find these values from the ACF and PACF graphs. Let’s plot those graphs.


To find p and q, we look for the lag at which each curve first drops to zero. Looking at the PACF curve, it first crosses the horizontal axis at a lag of around 2, hence the value of p is 2. Similarly, from the ACF curve we find that the value of q is also around 2.

Feed p, d, and q into ARIMA Model

We have found the values of p, d, and q, which are 2, 1 and 2 respectively. Let’s fit our ARIMA model with these values.


Check If Fitted Values Depict Original Curve

Since we are working with first-order differences, we have to convert the fitted values back to the real values. We went through the following sequence from the given values to the fitted values:

- Take logarithm of the values

- Take first-order differences of the values found in step no 1

So we have to reverse the process to predict the next values:

- Get back the first item from the log scaled values and place it at index 0 after shifting the fitted values once

- Take cumulative sum to get back logarithm scaled values

- Take exponentials of the values found in step no 2
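The reversal can be checked with a round trip. In this sketch the true first-order differences stand in for the model's fitted differences (an assumption made so the result can be compared exactly with the original), and the series is synthetic.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in for the passenger series (NOT the real data).
rng = np.random.default_rng(0)
months = pd.date_range("1949-01-01", periods=144, freq="MS")
t = np.arange(144)
passengers = pd.Series(
    np.exp(4.7 + 0.01 * t + 0.1 * np.sin(2 * np.pi * t / 12) + rng.normal(0, 0.03, 144)),
    index=months,
    name="#Passengers",
)

# Forward: log, then first-order differences.
log_passengers = np.log(passengers)
fitted_diff = log_passengers.diff().dropna()  # stand-in for fitted differences

# Reverse step 1: start every row from the first log value (index 0).
log_restored = pd.Series(log_passengers.iloc[0], index=log_passengers.index)
# Reverse step 2: add the cumulative sum of the differences.
log_restored = log_restored.add(fitted_diff.cumsum(), fill_value=0)
# Reverse step 3: exponentiate to undo the logarithm.
restored = np.exp(log_restored)

print(restored.head())
```

With real fitted differences the restored curve will only approximate the original, which is what the comparison plot is meant to show.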


If we don’t want to be bothered with all these conversions, well, we have a piece of good news. The ARIMA model can also plot the fitted values directly, even for future time intervals.

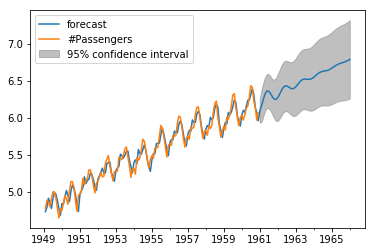

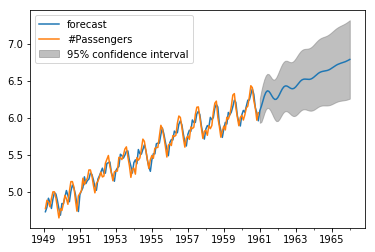

Predict and Plot Future Values Using The ARIMA Model

Our dataset has 144 rows, i.e. 12 years of values. If we want to plot the graph for the next 5 years, we have to call the plot_predict() function from the ARIMA model with parameters 1 (start from the first row) and 204 (the first 144 rows + 5x12 = 60 new rows).


The forecast function from the ARIMA model with parameter steps=60 will give us the 60 new values.


If you are looking for the full working code, here it is.

Acknowledgement: I took help from several popular online blogs and video tutorials like simplilearn, edureka, machinelearningmastery, otext, just to name a few. I rephrased some sentences and would like to give them full credits.